Differentiable surrogate functions for optimizing ROC curves

I’m a first-semester graduate student at Northern Arizona University, enrolled in the accelerated masters program for computer science. My journey through college has been pretty fast-paced, and this one year program continues that trend. The accelerated masters is only available for the non-thesis degree option, so instead of writing a thesis I’m working in the Machine Learning Research Lab for both semesters and taking a few courses. This is a brief overview of my project for this semester.

Maximizing the area under a ROC curve (AUC) can be useful for training models targeting binary classification and changepoint detection problems when your train data is highly imbalanced. One example is Maximizing AUC with Deep Learning for Classification of Imbalanced Mammogram Datasets, which chose to maximize AUC instead of using a standard loss function in their neural network. Since ROC curves can be very rough and sometimes non-convex, calculating the exact area underneath it can be challenging, so we use differentiable surrogate loss functions in place of exact AUC to approximate. Since these functions are differentiable, we can calculate the gradient while traversing them to figure out where their local minimum value is.

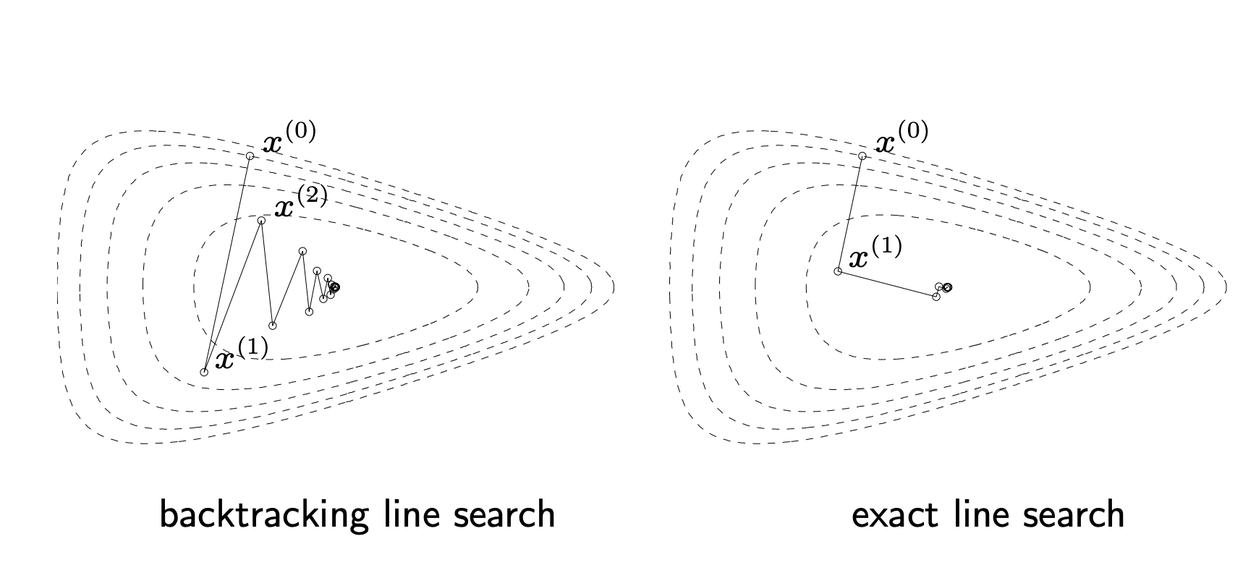

The major components of my research have been a literature review on methods for maximizing the AUC, and working on a surrogate function for AUC called Area Under Min (AUM) that is equipped to tackle non-standard ROC curves (where AUC > 1). Minimizing AUM correlates with maximizing AUC, and we can train our model to have a small AUM using gradient descent. The first paper was written by the director of the ML lab, Toby, and I’m helping on another paper that implements a new line search algorithm for optimizing AUM that will hopefully be more performant than a grid search or backtracking line search. This will end up being included in Toby’s aum package which is written in C++ & R.

I’ve been working on this algorithm all semester and finally got everything working on my test data last Thursday. I still need to build experiments to ensure it’s running in log-linear time, but the hardest part is complete! This has been a super interesting experience, and I’m excited to continue it next semester. Who knew convex optimizations could be so fun?!